Traditional error tracking feels like trying to solve a puzzle with half the pieces missing. You get a stack trace, maybe some context about the error, and then you’re left to piece together what actually went wrong. Most developers know this dance: digging through logs, recreating conditions, and hoping to catch the error in action.

The introduction of large language models (LLMs) into Sentry’s error analysis pipeline changes this familiar but frustrating dynamic. Instead of just showing you where the code broke, the LLM-powered analysis helps you understand why it broke and how to fix it properly.

ReferenceError: sa_event is not defined

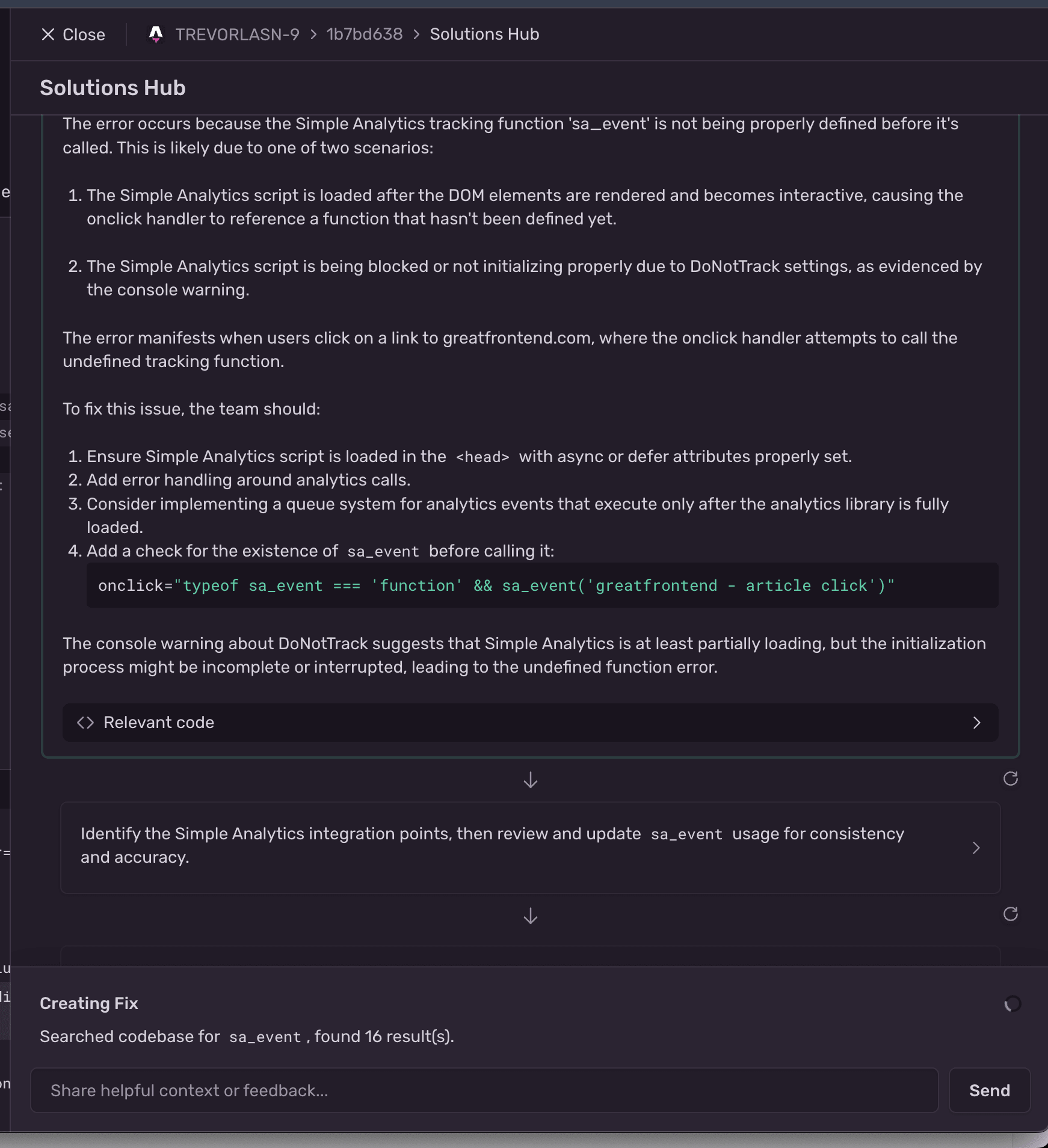

Take a common yet frustrating scenario: analytics tracking fails silently in production. Specifically, a ReferenceError tells us that sa_event isn’t defined. Traditional error tracking would stop here, leaving us to figure out if this is a loading issue, a scope problem, or something else entirely.
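To make the failure concrete, here is a minimal TypeScript sketch of the difference between calling `sa_event` directly and guarding it first. It assumes `sa_event` is a global function, taking a single event name, that the analytics script defines once it loads; that signature and the helper names `trackUnsafe` and `trackSafe` are hypothetical and for illustration only.

```typescript
// Assumption: `sa_event` is a global function defined by a third-party
// analytics script once it loads. `declare` tells TypeScript it may exist
// at runtime without emitting any code for it.
declare const sa_event: ((eventName: string) => void) | undefined;

// Mirrors the failing production code: it assumes the script already ran.
// If `sa_event` was never defined, evaluating the identifier throws
// "ReferenceError: sa_event is not defined" before the call happens.
function trackUnsafe(eventName: string): void {
  (sa_event as (eventName: string) => void)(eventName);
}

// `typeof` can inspect an undeclared identifier without throwing:
// it simply yields "undefined".
function trackSafe(eventName: string): boolean {
  if (typeof sa_event === "function") {
    sa_event(eventName);
    return true;
  }
  return false; // script missing, blocked, or not loaded yet
}
```

Because `typeof` never throws on an undeclared name, it is a reasonable first line of defense, though it only tells you the function is missing, not why.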

Sentry’s LLM goes further, reasoning about the application’s state and its potential failure modes. It recognizes that the missing 'sa_event' function isn’t just a random undefined variable: it’s a crucial part of an analytics integration with specific initialization requirements and timing considerations.

The LLM identifies a subtle timing issue between script loading and DOM rendering as a potential root cause. It also connects the failure to browser privacy features, recognizing that Do Not Track (DNT) settings might interfere with the analytics initialization process. This level of analysis mirrors the thought process of an experienced developer who understands not just the code, but the broader ecosystem in which it operates.
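The privacy angle can be sketched too. Below, a hypothetical helper decides whether a missing tracking function is worth reporting at all. It assumes, as the analysis only suggests is possible, that the analytics script skips initialization when Do Not Track is enabled; check your vendor's documentation before relying on that. The `PrivacySignals` shape mirrors the browser's deprecated `navigator.doNotTrack` field, where "1" means enabled; all names here are illustrative.

```typescript
// Subset of browser Navigator fields used here. `doNotTrack` is
// deprecated and may be missing, so the field is optional.
interface PrivacySignals {
  doNotTrack?: string | null;
}

// True when the browser advertises Do Not Track ("1" means enabled).
function doNotTrackEnabled(nav: PrivacySignals | undefined): boolean {
  return nav?.doNotTrack === "1";
}

// Decide whether a missing tracking function should be reported as a bug.
// Assumption: the analytics script deliberately skips initialization when
// DNT is on, so an absent function is expected in that case.
function missingTrackerIsBug(
  nav: PrivacySignals | undefined,
  trackerPresent: boolean,
): boolean {
  if (trackerPresent) return false;
  return !doNotTrackEnabled(nav);
}
```

In a browser you could pass `navigator` directly, since its `doNotTrack` property matches this shape; in tests, a plain object works.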

The proposed solution layers several defenses: proper script loading strategies with async/defer attributes, runtime existence checks for critical functions, and a queuing mechanism for event handling. I love that this approach recognizes robust error handling isn’t about fixing a single point of failure, but about building resilient apps that can handle various edge cases and failure modes.
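The runtime check and queuing layers of that solution can be combined into a stub-and-replay pattern, sketched below in TypeScript. This is one common way to implement such a queue, not necessarily what Sentry proposed: the `q` property, `installStub`, and `flushQueue` are hypothetical names, and the sketch assumes your own code (rather than the vendor script) replays the buffered events. The async/defer part lives in the HTML `<script>` tag and is not shown.

```typescript
// A tracking function accepting any arguments. The real signature of the
// analytics function is unknown here, so this is deliberately loose.
type TrackFn = (...args: unknown[]) => void;

// Stub function with an attached queue of buffered calls (hypothetical `q`).
interface QueuedTrackFn {
  (...args: unknown[]): void;
  q?: unknown[][];
}

// Where the global lives: `window` in a browser, any plain object in tests.
type Host = Record<string, unknown>;

// Runtime existence check: only install the stub when no real function is
// present, so early calls are buffered instead of throwing ReferenceError.
function installStub(host: Host, name: string): void {
  if (typeof host[name] === "function") return;
  const stub: QueuedTrackFn = (...args: unknown[]) => {
    if (!stub.q) stub.q = [];
    stub.q.push(args);
  };
  host[name] = stub;
}

// Call once the real function is available (for example from the script's
// load handler): swap it in, then replay buffered calls in their original
// order. Returns how many queued calls were replayed.
function flushQueue(host: Host, name: string, real: TrackFn): number {
  const current = host[name] as QueuedTrackFn | undefined;
  const pending = current?.q ?? [];
  host[name] = real; // later calls go straight to the real function
  for (const args of pending) real(...args);
  return pending.length;
}
```

In a browser, `host` would be `window` (cast to a record type), with `installStub` running before any tracking code and `flushQueue` running from the analytics script's load handler. Some analytics vendors ship their own stub that reads a similar queue when the script loads, so avoid layering this on top of one without checking.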

Sentry’s implementation of LLM technology signals a broader shift in developer tools.

We’re moving from tools that simply report problems to intelligent platforms that can reason about code behavior and suggest architectural improvements. This is particularly significant for web development, where applications need to gracefully handle a wide range of runtime environments and user privacy settings.

Use The Right Tool, But Don’t Forget The Basics

While Sentry’s LLM integration shows promise, we need to approach these AI-powered solutions with a healthy dose of skepticism. The current implementation, though impressive in its analysis of reference errors and initialization issues, might struggle with more complex scenarios.

When an LLM suggests adding error handling or implementing a queue system, there’s a risk that developers will implement these solutions blindly, without grasping why they’re necessary. This could lead to cargo-cult programming, where patterns are copied without understanding their purpose or implications.

Despite these valid concerns, Sentry’s LLM integration represents a significant step forward in developer tooling. The ability to quickly analyze errors and provide context-aware solutions saves valuable development time while potentially teaching developers about best practices and system design.

The key lies in using these AI-powered insights as a complement to, rather than a replacement for, developer expertise. When used thoughtfully, these tools can elevate our debugging practices and allow us to focus on more complex architectural decisions.

As the technology continues to evolve, we might look back at this moment as the beginning of a new era in software development, one where AI and human expertise work together to create more reliable, maintainable systems.

Overall, I’m excited to see how Sentry’s LLM integration evolves and how it shapes the future of error debugging. While it might not solve every problem, it’s a promising step towards making error tracking smarter, more efficient, and ultimately more enjoyable for developers.